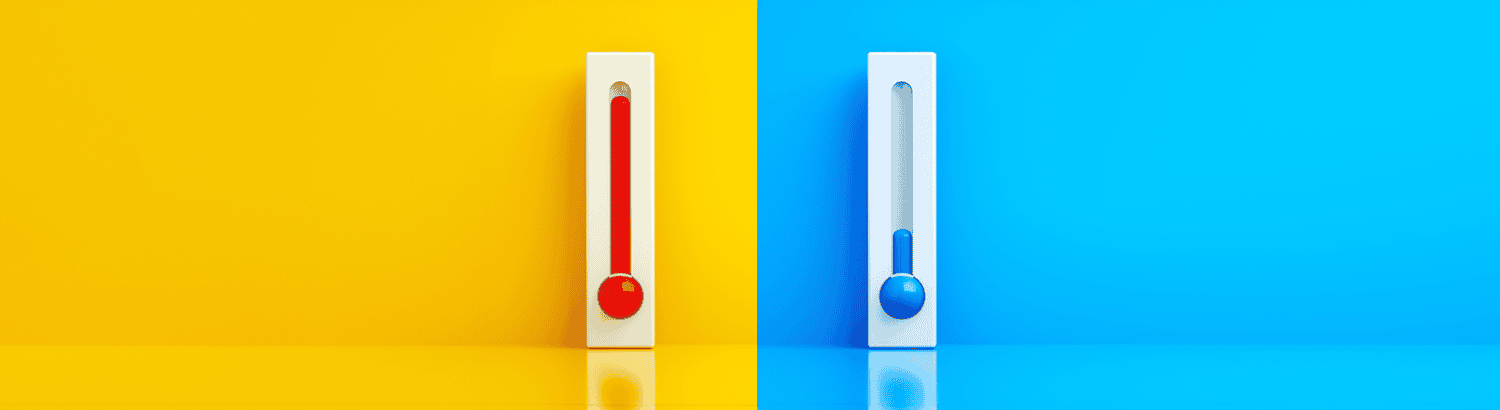

Hot, Cold, or Warm? What Is the Best Disaster Recovery Site?

A disaster recovery (DR) site is where your IT applications and data may need to run temporarily in a disaster scenario. In the past, these sites consisted of physical servers, and the terms hot, cold, and warm referred quite literally to whether the equipment was turned on, or to how much of the DR site's capacity was powered on and ready to recover. Today, with virtualization and cloud computing, the definitions are not so clear. As someone who has been in the disaster recovery business for 25 years, I will share some of my insights on what these terms mean today.

How a Hot Disaster Recovery Site Works

When I started in the disaster recovery industry, I worked on a product that replicated data in real time from one physical server to another physical server that was fully powered on. In the event of a disaster, that DR server could quickly start up any applications required and, with some quick networking updates, take the place of a failed production server. This was considered a hot site along with similar sites that kept DR servers fully powered on and ready to go or that even kept DR servers in lockstep for fault tolerance.

Today, with virtualization, virtual machines do not necessarily need to be running at the DR site, but it is a good idea to keep the physical host machines running. This may blur the line between hot and warm sites, but a site where the entire physical data center is powered on may still qualify as a hot site. It is also possible to have these virtual machines fully powered on and receiving replicated data through agents, making it a fully hot site.

Pros of a hot DR site:

- Recovery time is faster, especially when the only thing that needs to be started is an application. Starting an entire virtual machine takes longer but not as long as also having to boot the physical host it runs on.

- Data can be replicated directly to where it is needed in a running environment and does not have to be relocated again for recovery.

- Powered-up machines can be easily patched and updated at any time rather than having to power them on for maintenance.

Cons of a hot DR site:

- It takes a lot of power and cooling to run a hot site, especially if the DR site matches the capacity of the production site. It is neither the most sustainable option nor an inexpensive one.

- By virtue of being powered up and online, the DR resources are vulnerable to cyberattack, increasing the attack surface and potentially creating more vulnerabilities if not regularly maintained.

How a Cold Disaster Recovery Site Works

As you might expect, a cold site is the opposite of a hot site, with resources powered off until needed. Imagine a data center without blinking lights and whirring fans. In the event of an emergency, physical servers and storage are powered on and then recovery takes place. For a truly cold site, the primary concern is: how does the data arrive for recovery? It could be transported physically in the form of tapes or transferred over a network. The biggest challenge of a cold site is the recovery time, because not only do all the resources have to be powered on, but bringing the data into the cold site can take days or weeks.
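To see why network-based recovery into a cold site can stretch into days, a rough back-of-the-envelope estimate helps. The sketch below is illustrative only: the function name `transfer_time_hours`, the 80% link efficiency, and the figures of 100 TB over a 1 Gbps link are assumptions chosen for the example, not measurements from any real site.

```python
def transfer_time_hours(data_tb: float, link_mbps: float, efficiency: float = 0.8) -> float:
    """Estimate the hours needed to copy data_tb terabytes over a link_mbps link.

    efficiency is a hypothetical factor for protocol overhead and contention.
    """
    if data_tb < 0 or link_mbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("data_tb must be >= 0, link_mbps > 0, and 0 < efficiency <= 1")
    total_bits = data_tb * 1e12 * 8                 # decimal terabytes to bits
    effective_bps = link_mbps * 1e6 * efficiency    # usable bits per second
    return total_bits / effective_bps / 3600        # seconds to hours


# Assumed example: 100 TB of backup data over a 1 Gbps (1000 Mbps) link.
hours = transfer_time_hours(100, 1000)
print(f"{hours:.1f} hours, about {hours / 24:.1f} days")  # 277.8 hours, about 11.6 days
```

Under these assumptions, the data transfer alone takes more than a week before any server can start recovering, which is in line with the days-or-weeks range described above.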

Cold sites rarely exist these days for disaster recovery because of the long recovery times. I would argue, though, that one form of a cold site could be defined within public cloud computing. Replicating virtual machines and data to or even within a public cloud can have some of the same characteristics of a cold site with none of those workloads running until needed. Only the data is arriving and waiting to be converted into running virtual machines when the need arises.

Under a cloud subscription model, this could be considered a cold site because, apart from the storage and ingress of data, there is no cost until the resources are provisioned in the event of an emergency.

Pros of a cold DR site:

- There is low to no power consumption—nothing needs power or cooling until the need arises.

- Powered-off resources cannot be attacked over the network while in a cold state.

Cons of a cold DR site:

- Recovery time is slower due to waiting for power up and data recovery.

- It’s hard to keep systems maintained with software and security updates as they need to be powered on for each update.

How a Warm Disaster Recovery Site Works

A warm site can really be anything on a spectrum between hot and cold sites. I would argue that the majority of DR sites fall into the “warm” category, or if not, should. With virtualization and cloud computing, a disaster recovery site should be using only a fraction of the power of a production site, and resources are kept in a “warm” state where they can be started up very quickly with data that has been replicated to that site.

Warm sites can range from the scenario I mentioned earlier, with an entire virtualization infrastructure running but no VMs powered on, to one where only the storage is powered on at the DR site to receive the replicated data while the rest of the infrastructure is cold. The key component of a warm recovery site is that the data exists in an accessible state so that the recovery can happen quickly, even if not quite as fast as a hot site provides.

Pros of a warm DR site:

- Recovery times are much faster than cold site recovery, even if all components are not powered on yet.

- As with a hot site, any resources powered on can be more easily managed. In virtualization and cloud environments, having the virtualization layer on allows for easier management and maintenance of those components.

Cons of a warm DR site:

- Recovery speeds can vary depending on how warm or cold the site is, and the more you have powered on, the greater the power consumption and cooling requirements.

- Those powered-on components are online and susceptible to cyberattacks.

Factors to Consider When Choosing a DR Site

Understanding the concepts of hot, warm, and cold sites is a good foundation, but here are some additional factors to consider when planning disaster recovery sites.

Dedicated or Mixed-Use DR Sites

It is easy to think of DR sites as data centers dedicated solely to DR, simply standing by until a disaster recovery is needed. This is certainly a valid type of DR site. Even when using a cloud disaster recovery site, your organization may contract with a cloud provider solely for the purpose of DR. But nothing says a site can't run production workloads and store production data while also operating in the capacity of a DR site.

If you already have multiple business sites, it may make sense to distribute production workloads across sites. This way, if a disruption hits one site, it only affects the applications and data running at that site and the other sites can continue as normal (or close to normal). Even with just two sites, it may make sense to have both sites running production workloads and each site have the added capacity to act as a DR site for the other site, so that if either site goes down you can run everything at the other site until the downed site is brought back online.

Of course, there can be many variations of this concept with varying amounts of production running across varying sites, but it means incorporating your hot/warm/cold sites into existing production sites so that you don’t have to add an additional dedicated DR site to the mix.
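The mixed-use arrangement described above only works if the surviving sites have enough headroom to take on the failed site's workloads. The following is a minimal sketch of that check. The function name `can_absorb`, the site names, and the capacity units (think of them as vCPUs or any other common unit) are hypothetical and exist only for illustration.

```python
def can_absorb(capacity: dict[str, float], load: dict[str, float], failed: str) -> bool:
    """Return True if the surviving sites have enough spare capacity
    to run the production load of the failed site."""
    spare = sum(capacity[site] - load[site] for site in capacity if site != failed)
    return spare >= load[failed]


# Hypothetical two-site setup, each site running production and acting as DR for the other.
capacity = {"site_a": 100, "site_b": 100}
load = {"site_a": 40, "site_b": 50}

for site in capacity:
    print(site, "failure can be absorbed:", can_absorb(capacity, load, site))
```

If `site_a` grew to a load of 70 in this example, `site_b` would have only 50 units spare and could no longer run everything, which is the kind of drift worth checking as production workloads change.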

Complex DR Architectures

The basic concept of DR often depicts a single DR site for a production site. We call this the one-to-one architecture, and it is at the very least the starting point for all DR architectures. But don’t let anyone tell you that this is a limit.

You may want or even need to have multiple DR sites for your production site so that your applications and data have twice the protection. We call this the one-to-many architecture and the “many” can be two or more sites where you want your data protected.

These sites can be any combination of hot, warm, and cold as fits your DR needs. For example, an organization may want both a hot and a cold site for disaster recovery so that in a disruption they can recover to the hot site quickly, but if for whatever reason the hot site was compromised, they could recover to the cold site as a last resort. It could also be a similar scenario with a hot site and a warm site, two warm sites, a warm and a cold site, or any combination that provides the desired protection.

Just as you could have many DR sites, you could have many production sites and protect them to a single DR site. We call this the many-to-one architecture, and it can consolidate and simplify the recovery process by having a dedicated team at a single site recover multiple sets of applications and data. Because that one DR site is recovering many production sites, it may change the consideration of whether that site should be hot, warm, or cold.

In larger organizations with many sites, you can end up with even more complex architectures—with many production sites being protected to many DR sites in multiple ways—but no matter how complex it gets, the concepts of hot, warm, and cold sites should be considered.
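One way to reason about these architectures is to write down which production site is protected to which DR site and look at the fan-out and fan-in. The sketch below does that under stated assumptions: the function name `classify_topology`, the site names, and the "many-to-many" label for the more complex case are illustrative choices, not terms or tooling from any particular product.

```python
from collections import defaultdict


def classify_topology(protections: list[tuple[str, str]]) -> str:
    """Classify a list of (production_site, dr_site) pairs.

    Independent pairs with no shared sites are still reported as one-to-one.
    """
    if not protections:
        raise ValueError("at least one protection pair is required")

    dr_sites_by_prod = defaultdict(set)
    prod_sites_by_dr = defaultdict(set)
    for prod, dr in protections:
        dr_sites_by_prod[prod].add(dr)
        prod_sites_by_dr[dr].add(prod)

    fan_out = any(len(sites) > 1 for sites in dr_sites_by_prod.values())
    fan_in = any(len(sites) > 1 for sites in prod_sites_by_dr.values())

    if fan_out and fan_in:
        return "many-to-many"
    if fan_out:
        return "one-to-many"
    if fan_in:
        return "many-to-one"
    return "one-to-one"


# Hypothetical example: one production site protected to a hot and a cold DR site.
print(classify_topology([("hq", "dr_hot"), ("hq", "dr_cold")]))  # one-to-many
```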

Business Priorities through Tiering

In planning for disaster recovery, any organization must determine a recovery time objective (RTO) and a recovery point objective (RPO). These determine how quickly the organization should recover and how much data it can afford to lose. They can range anywhere from seconds to days or even weeks of recovery time or data lost. While it is tempting to think of these as simple monolithic measurements for an entire organization, it is rarely so simple. Instead, an organization must determine varying RTOs and RPOs depending on the role of applications and their data in the business.

Organizations often tier their applications and data. Tier one groups the critical operational applications and data the business needs to run in a minimal capacity. A second tier consists of applications that are important but can be recovered after the critical tier. Any additional applications and data can then be grouped into third or lower tiers to be recovered later. These tiers can be related to the hot, warm, and cold DR site types, as the recovery times for those sites vary.

Having these varying tiers and RTOs means that a DR site may ultimately consist of a combination of hot, warm, and cold areas to meet the recovery needs of the tiered production site workloads. While having a combination of hot, warm, and cold at a DR site could just put that site under the definition of “warm,” the concepts of each type still apply to those specific configurations. There are no hard rules here, and nothing prevents a site from being in all three categories in how it provides recovery for multiple workloads or multiple production sites being protected to it.
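As a rough illustration of how tiers and RTOs might map onto hot, warm, and cold areas, consider the sketch below. Since there are no hard rules, the cutoffs used here (15 minutes for hot, 24 hours for warm) are purely hypothetical, as are the tier names, the RTO and RPO values, and the helper `site_type_for`. Your own thresholds should come out of your DR planning.

```python
from dataclasses import dataclass
from datetime import timedelta

# Hypothetical cutoffs; choose your own based on business requirements.
HOT_MAX_RTO = timedelta(minutes=15)
WARM_MAX_RTO = timedelta(hours=24)


@dataclass(frozen=True)
class Tier:
    name: str
    rto: timedelta  # how quickly the tier must be recovered
    rpo: timedelta  # how much data loss the tier can tolerate


def site_type_for(tier: Tier) -> str:
    """Suggest a DR area for a tier based only on its RTO."""
    if tier.rto <= HOT_MAX_RTO:
        return "hot"
    if tier.rto <= WARM_MAX_RTO:
        return "warm"
    return "cold"


# Illustrative tiers with made-up objectives.
tiers = [
    Tier("tier 1", rto=timedelta(minutes=5), rpo=timedelta(seconds=30)),
    Tier("tier 2", rto=timedelta(hours=4), rpo=timedelta(hours=1)),
    Tier("tier 3", rto=timedelta(days=3), rpo=timedelta(days=1)),
]

plan = {tier.name: site_type_for(tier) for tier in tiers}
print(plan)  # {'tier 1': 'hot', 'tier 2': 'warm', 'tier 3': 'cold'}
```

This sketch keys only on RTO for simplicity. In practice the RPO also matters, because a tight RPO implies continuous or frequent replication regardless of how warm the compute side is.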

Which Disaster Recovery Site Is Right for You?

While I hope you can appreciate all the complexities and nuances of these terms and how they apply across many different possible architectures, I also encourage you not to overthink things. By defining those RTOs and RPOs during your disaster recovery planning, you’ll have a good idea of what your organization requires for recovery and can choose the DR site and DR solution that meets those requirements. Whether it is hot, warm, or cold isn’t as important as whether or not it is right for you and your disaster recovery plan.

At HPE and Zerto, we want to provide you with the best technology available, including disaster recovery services and data protection solutions with RTOs and RPOs measured in seconds and all the hardware and management services you need to build out DR sites from hot to warm to cold. So wherever your DR thermometer reads, HPE and Zerto are here to help.

Learn more about using Zerto as your DR solution. Or, try out Zerto for yourself and see just how easily and quickly workloads can be recovered in our on-demand, hands-on labs.

Frequently Asked Questions

What is the primary site in disaster recovery?

A primary site is the production site where all the IT operations are run (services, applications, data storage, etc.). This is the site that usually needs to be protected in case of any major unplanned disruption such as a natural disaster or a man-made disaster (ransomware, a faulty software update, etc.), or any planned event such as a data center migration or consolidation.

In some instances, the “primary” site is actually made up of multiple data centers in different locations.

What is a disaster recovery site?

A disaster recovery site is a secondary site from which you can recover your IT environment and run your IT operations in case of a major disruption on your primary, or production, site.

Depending on your DR implementation, your secondary site can be on-premises (usually a co-location), in the cloud, in-cloud (if your primary site is already in the cloud), or set up and managed by an MSP (managed service provider) as a service (DRaaS).

Disaster recovery sites can also be dedicated DR sites or be used for both DR and production purposes.

What are the main architectures for DR sites?

There are three main types of architecture, or topology, for DR sites.

First, the one-to-one architecture depicts a single DR site for a single production site. It is the most basic and common architecture.

Second, the one-to-many architecture uses two or more DR sites for a single production site. These sites can be a combination of hot/warm/cold sites.

Third, the many-to-one architecture is based on having multiple production sites protected by one DR site.

David Paquette